Predicting an Event from Audio Data

Abstract

The goal of my project was to develop an algorithm to predict an event from audio data.

Specifically: within some margin of error, I'd like to predict whether a door has closed based on the sound it makes when it closes.

This was my final project for the 66-hour Data Science course at General Assembly.

The Game Plan

- Locate the data set

- Read the data into Python

- 'Clean' the data with the Fast Fourier Transform (FFT) and/or other audio analysis tools

- Perform unsupervised analysis to try to define what a cluster of similarly 'shaped' data looks like with respect to frequency, time, and potentially amplitude or other variables

- Perform supervised, categorical analysis to divide the data into groups based on how well each segment fits the previously defined 'shape', and what the margin of error of that fit is.

- See how well the predicted events actually correspond to a door closing

What actually happened:

The challenges I encountered and how I overcame them

Step 1: Locate the Data

I found a YouTube video of someone recording themselves opening and closing a door over and over for 3 hours.

Step 2: Read the Data into Python/SciPy

I decided to use a Python-based command-line program called youtube-dl to download the audio from the YouTube video.

The youtube-dl GitHub can be found here

Advantages of youtube-dl

- File Size

  - One website that downloads YouTube videos to your computer estimated that the audio file of the 3-hour video would be 6 GB

- Efficiency

  - Downloading from the terminal is much more efficient than downloading through a browser

- Control

  - Using youtube-dl, I have more direct control over the quality of the file that is downloaded

Issue

I could only read audio into SciPy from a .wav file, but the audio from the YouTube video was only available for download as .webm or .m4a.

Resolution

youtube-dl has audio/visual post-processing functionality that can save the file being downloaded to just about any file type I need (including .wav)

Issue

youtube-dl's audio/visual post-processing functionality requires the installation of either FFmpeg or Libav.

Resolution

I chose to install FFmpeg. Since youtube-dl needs only one of the two (Libav began as a fork of FFmpeg), there was no need to install Libav as well.

A build of FFmpeg for just about any operating system can be downloaded from here
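Once FFmpeg is installed, youtube-dl can also be driven from Python instead of the terminal. The sketch below is modeled on the embedding example in the youtube-dl README: the `FFmpegExtractAudio` post-processor with `preferredcodec` set to `"wav"` converts the download to .wav. The helper names and the output filename template are hypothetical, and the import is kept inside the download function so the options can be built without youtube-dl installed.

```python
def build_ydl_options(output_template="door.%(ext)s"):
    """Options for youtube_dl.YoutubeDL: best audio stream, converted to .wav by FFmpeg."""
    return {
        "format": "bestaudio/best",   # pick the best audio-only stream available
        "outtmpl": output_template,   # hypothetical output filename template
        "postprocessors": [
            # Post-processing step that needs FFmpeg (or Libav) on the PATH
            {"key": "FFmpegExtractAudio", "preferredcodec": "wav"},
        ],
    }


def download_wav(url, output_template="door.%(ext)s"):
    """Download the audio at `url` and save it as a .wav file."""
    import youtube_dl  # imported lazily; requires youtube-dl to be installed

    with youtube_dl.YoutubeDL(build_ydl_options(output_template)) as ydl:
        ydl.download([url])
```

The terminal equivalent is roughly `youtube-dl -x --audio-format wav <url>`.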

Step 3: 'Clean' the Data

Initially I wanted to use a comprehensive open-source C++ audio analysis library called Essentia to analyze the audio data.

Essentia includes a collection of algorithms designed around extracting all sorts of features from audio files. The library also includes Python bindings, comprehensive documentation, and even a Python tutorial.

Essentia can be downloaded here

Issue

Unfortunately, I was unable to get a working install of Essentia on my computer.

Resolution

SciPy also has a comprehensive library of functions that are used in audio analysis, as well as really great documentation on how to use the algorithms.

However, SciPy is not wrapped as nicely as Essentia, and the algorithms for the sort of cleaning I wanted to do on the audio data had to be written from scratch.

SciPy is a separate package from pandas, though the two are commonly installed together, and it can be found here for direct download
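As an illustration of the kind of from-scratch cleaning this involves, here is a minimal FFT-based band-pass filter written with NumPy. It is a sketch, not the project's actual cleaning code: the function name `fft_bandpass` and any cutoff frequencies you pass to it are hypothetical.

```python
import numpy as np


def fft_bandpass(x, fs, low_hz, high_hz):
    """Keep only frequency content between low_hz and high_hz (inclusive).

    x: 1-D array of samples for one channel; fs: sampling rate in Hz.
    """
    x = np.asarray(x, dtype=np.float64)
    spectrum = np.fft.rfft(x)                      # spectrum of a real signal
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)    # frequency (Hz) of each bin
    spectrum[(freqs < low_hz) | (freqs > high_hz)] = 0  # zero out-of-band bins
    return np.fft.irfft(spectrum, n=len(x))        # back to the time domain
```

Zeroing bins like this is a "brick-wall" filter and can introduce ringing around sharp sounds; `scipy.signal.butter(..., output="sos")` combined with `scipy.signal.sosfiltfilt` is a smoother alternative.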

Step 4: Scale Back

Issue

After reading the .wav file I created from the video of the door opening and closing, things got a little out of hand.

When you read a .wav file into Python using scipy.io.wavfile.read('file_name.wav'), it returns a tuple where:

- The 0th element is an integer sampling rate (the number of samples per second of audio data in the file)

- The 1st element is a NumPy array containing a discrete numerical representation of the waveform.
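A minimal sketch of the read-and-split step, assuming a mono or multi-channel PCM .wav file. The helper name `load_channels` is hypothetical; `scipy.io.wavfile.read` returns a 1-D array for mono audio and a 2-D array of shape `(n_samples, n_channels)` otherwise.

```python
import numpy as np
from scipy.io import wavfile


def load_channels(path, mmap=False):
    """Return (sampling_rate, list_of_channel_arrays) for a .wav file."""
    rate, data = wavfile.read(path, mmap=mmap)
    if data.ndim == 1:
        return rate, [data]  # mono: a single channel
    # Multi-channel: column j holds every sample of channel j
    return rate, [data[:, ch] for ch in range(data.shape[1])]
```

For a multi-gigabyte file, passing `mmap=True` memory-maps the samples instead of loading them all into RAM at once.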

In my case the NumPy array was two-dimensional: each row held two integers, one for each of the two audio channels in the .wav file. After splitting the channels I ended up with two very long arrays to look at. Specifically,

3 hours and 22 seconds of audio data at a sample rate of 44,100 Hz meant each audio channel was 477,271,040 integers long.
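As a quick check on that figure:

```latex
\begin{aligned}
(3 \times 3600 + 22)\ \text{s} \times 44{,}100\ \tfrac{\text{samples}}{\text{s}} &= 477{,}250{,}200\ \text{samples} \\
\frac{477{,}271{,}040\ \text{samples}}{44{,}100\ \text{samples/s}} &\approx 10{,}822.47\ \text{s}
\end{aligned}
```

The recording therefore runs about half a second past 3 hours and 22 seconds, which matches the sample count, and the two channels together hold 954,542,080 integers.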

Resolution

I decided to change the course of the project towards more of a 'proof of concept.'

I picked a 15-second slice of audio, starting 40 seconds in, to focus on.
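Slicing by time only requires converting seconds into sample indices with the sampling rate. A minimal sketch; `slice_seconds` is a hypothetical helper name.

```python
import numpy as np


def slice_seconds(channel, rate, start_s, end_s):
    """Return the samples of `channel` between start_s and end_s (in seconds)."""
    channel = np.asarray(channel)
    start = int(round(start_s * rate))  # first sample index to keep
    end = int(round(end_s * rate))      # one past the last sample index
    return channel[start:end]
```

At 44,100 Hz, a 15-second slice holds 661,500 samples per channel, compared with 477 million for the full recording.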

Step 5: Strategize

One of my original goals, which I thought was very important, was to have the audio data itself predict when an event was happening, as opposed to marking the timestamps of each door opening or closing by hand.

My New Strategy

Generate timestamps for the onset and end of each event of the type I was interested in.

Consolidate the audio data denoted by those timestamps.

Run a short-time Fourier transform (STFT) on the consolidated audio data to generate a frequency spectrum that might be characteristic of the audio event I am interested in.

Perform an inverse Fourier transform on the frequency spectrum generated in the previous step to get a characteristic shape for the audio of the door closing!
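The strategy above can be sketched with `scipy.signal.stft`, assuming the event timestamps are already known as `(start, end)` pairs in seconds. The helper name `characteristic_shape`, the window length, and the choice to average STFT magnitudes over time and invert them with zero phase are all assumptions made for illustration, not the project's code.

```python
import numpy as np
from scipy.signal import stft


def characteristic_shape(audio, fs, events, nperseg=1024):
    """Average spectrum of the marked events and a zero-phase waveform built from it.

    audio: 1-D channel array; fs: sampling rate in Hz;
    events: list of (start_seconds, end_seconds) pairs.
    """
    # 1. Consolidate: cut out every event and join them end to end
    segments = [audio[int(round(s * fs)):int(round(e * fs))] for s, e in events]
    consolidated = np.concatenate(segments).astype(np.float64)

    # 2. STFT: Z has shape (n_frequencies, n_time_frames)
    f, _, Z = stft(consolidated, fs=fs, nperseg=nperseg)

    # 3. Average magnitude over time -> one spectrum characteristic of the events
    mean_mag = np.abs(Z).mean(axis=1)

    # 4. Inverse FFT of the magnitude spectrum (phase discarded), centered in the window
    shape = np.fft.fftshift(np.fft.irfft(mean_mag, n=nperseg))
    return f, mean_mag, shape
```

Because the phase is thrown away, the returned `shape` is a symmetric pulse with the events' average frequency content, not a reconstruction of any single door closing.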

Step 6: Timestamps

First I tried to use an auto-correlation algorithm to highlight the areas in a given audio channel that were repeated. This proved marginally successful at best.

Issue

An auto-correlation took hours to compute for my little 15-second audio slice.

There was no way I could use the same technique on the original arrays, which had over 477 million integers per audio channel.
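For comparison, a direct auto-correlation of n samples costs on the order of n² operations, while computing it through the FFT costs on the order of n log n. For a 15-second slice at 44,100 Hz (661,500 samples), n² is about 4.4 × 10¹¹. The sketch below is a swapped-in technique, not what the project used: it computes a normalized auto-correlation with `scipy.signal.fftconvolve`. The function name `autocorrelation_fft` is hypothetical, and I have not timed it on the project's data.

```python
import numpy as np
from scipy.signal import fftconvolve


def autocorrelation_fft(x):
    """Normalized auto-correlation for non-negative lags, computed via the FFT."""
    x = np.asarray(x, dtype=np.float64)
    x = x - x.mean()                             # remove the DC offset first
    ac = fftconvolve(x, x[::-1], mode="full")    # equals np.correlate(x, x, "full")
    ac = ac[len(x) - 1:]                         # keep lags 0, 1, 2, ...
    return ac / ac[0]                            # lag 0 normalized to 1
```

Peaks at non-zero lags mark spacings at which the signal repeats itself; for a signal that repeats every 50 samples, the largest peak after lag 0 lands at lag 50.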

Resolution

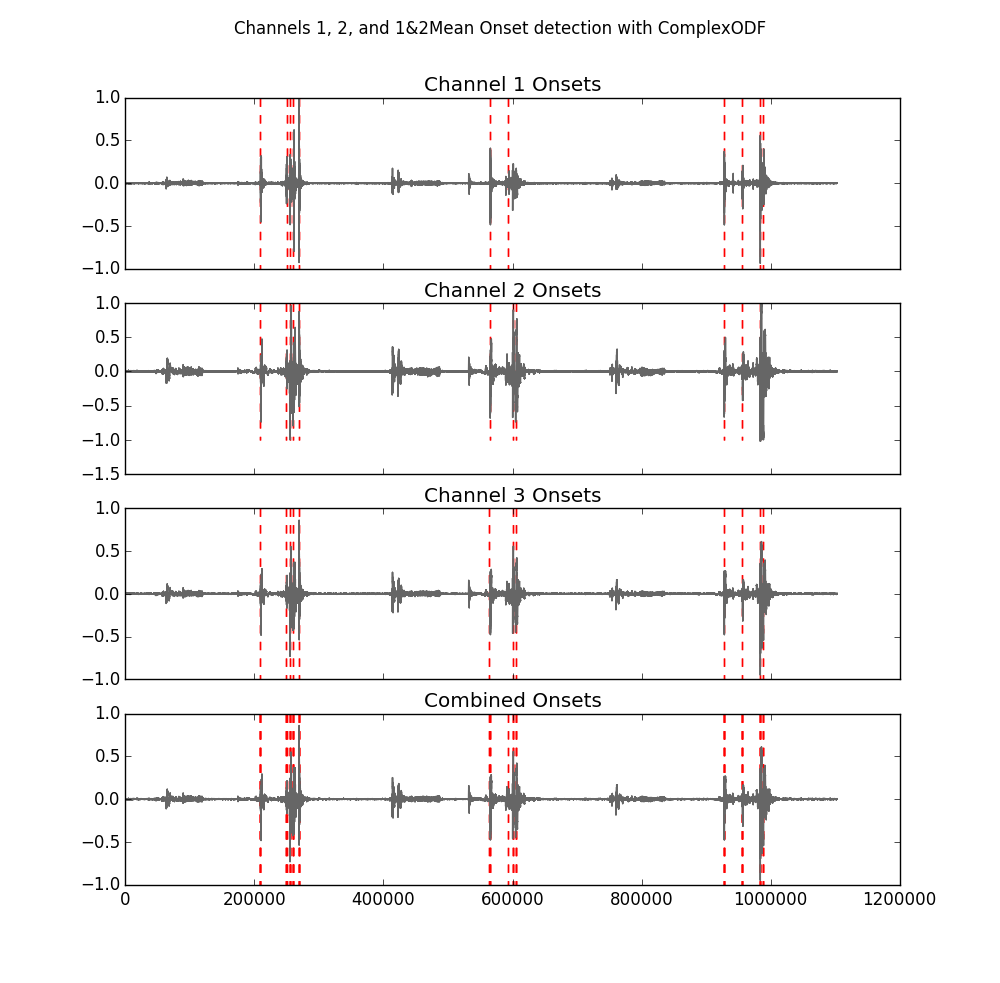

I downloaded a program called Modal, which stands for Musical Onset Database And Library.

Modal can be downloaded from either of the following links

Modal comes equipped with a number of methods to detect the onset of an audio event.

The two onset detection functions I found most useful for my project were ComplexODF and LPEnergyODF.

To get timestamps for the end of each audio event, I simply reversed the array and ran the onset detection algorithms on the reversed data.
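The reverse-the-array trick works with any onset detector. The sketch below does not use Modal's API: it substitutes a crude energy-based onset detection function (the positive change in frame energy, followed by simple peak picking) so the idea is self-contained. The function names, frame size, hop size, and threshold are all hypothetical.

```python
import numpy as np


def energy_onsets(x, frame=512, hop=256, threshold=0.5):
    """Sample indices where frame energy rises sharply (a crude energy ODF)."""
    x = np.asarray(x, dtype=np.float64)
    if len(x) < frame:
        return np.array([], dtype=int)
    n_frames = 1 + (len(x) - frame) // hop
    energy = np.array([np.sum(x[i * hop:i * hop + frame] ** 2) for i in range(n_frames)])
    # Onset detection function: only increases in energy count
    odf = np.maximum(np.diff(energy, prepend=energy[0]), 0.0)
    if odf.max() == 0:
        return np.array([], dtype=int)
    odf = odf / odf.max()
    # Peak picking: local maxima above the threshold
    peaks = [i for i in range(1, len(odf) - 1)
             if odf[i] >= threshold and odf[i] > odf[i - 1] and odf[i] >= odf[i + 1]]
    return np.array(peaks, dtype=int) * hop  # frame index -> sample index


def event_offsets(x, **kwargs):
    """End times: detect onsets on the reversed signal, then map indices back."""
    x = np.asarray(x)
    reversed_onsets = energy_onsets(x[::-1], **kwargs)
    return np.sort(len(x) - 1 - reversed_onsets)
```

Both functions report positions only to within roughly one frame, so the timestamps are approximate; Modal's detectors are more sophisticated than this energy difference.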

Download Project

My project can be downloaded from my GitHub